If you work in search or content strategy, you’ve felt the ground shift beneath your feet. Generative AI has made it possible for anyone to flood the internet with text, video, and images at near-zero marginal cost.

The old playbook of “find a keyword gap, crank out a blog post, sprinkle in backlinks” has been broken for a decade. The teams that evolved 10+ years ago are seeing more wins now than ever as authority and differentiation become the deciding factors.

Every member of the team must evolve and focus on engineering relevance. As Mike King, founder of iPullRank and SEO Week, elegantly describes:

“The future of search is great for the user and brutal for marketers. Users are getting better answers to their questions while ChatGPT, Google’s AI Overviews, and the rise of agentic browsing are rewriting the rules in real time. These systems don’t care about your rankings; they care about what content helps them answer the question best.

SEO as we know it is deprecated. Blue links are disappearing. Citations aren’t clicked on. Personalization and AI-driven synthesis are gatekeeping what gets seen.

Most SEO playbooks are relics from a link-driven world that’s rapidly vanishing. To top it off, there is a dearth of good information on what works and a lot of bad takes from previously trustworthy sources as the new best practices are being defined. If you're not engineering for relevance at the model level, structuring content for retrieval, reasoning, and response, you’re invisible. This isn’t about chasing keywords anymore. It’s about surviving in a search landscape where your page is just raw material for someone else's output.”

I’ve spent over 25 years building and analyzing search technology, co-founding MarketMuse, and more recently guiding Siteimprove’s product vision after our acquisition. From patenting semantic keyword analysis processes to launching the first quantified metric for Topical Authority in a software platform (over eight years ago), that work has taught me one thing you can bet on: authority compounds. When you combine deep expertise with disciplined processes and ethical AI, you don’t just chase rankings; you set the agenda.

Here’s a quick overview of where search is headed, how Siteimprove is aligning to that future, and what practical steps you can take today to thrive in an age of infinite content. For a detailed explanation of how these principles extend into an autonomous, proactive framework, see our guide to Agentic Content Intelligence.

The infinite-content paradox

Content scarcity is gone forever

The economics of publishing flipped. A decade ago, quality content was scarce and expensive; now it’s abundant and mostly mediocre. When everyone can generate text, differentiation, not production, becomes the limiting factor.

Signal-to-noise and the trust bottleneck

Search engines can no longer rely on keyword frequency or simple counts of anything. They need richer signals of topical depth, originality, accessibility, and user satisfaction. That, in turn, means your entire site matters. Your architecture, accessibility, content quality and footprint, and brand governance now weigh on every query and every collection of queries that may be part of a “query fan-out” process to achieve a synthesized response.

What does that mean? When you run a search on Google, the query is analyzed and transformed into many queries that refine it, round out the searcher’s journey, and dig deeper into the predicted intents. You need to work harder to have pages performing for every intent represented by that query expansion process.

The outcomes of winning this way can be dramatic: inclusion in 10+ search engine results page (SERP) features and AI Overviews, and control over the narrative of language model output.

Topic cluster development, differentiated content, and full-funnel coverage aren’t things that can be relegated to being a path to publishing “SEO content.”

They are the only things that can keep you relevant.

Site-level authority isn’t a side quest; it’s the main campaign.

How search got smarter: From inverted indexes to neural networks

Classic information retrieval: keywords, TF-IDF, BM25

Early search engines of the 1990s–2000s relied on lexical matching, breaking queries into keywords and using an inverted index to fetch pages containing those terms. Documents were ranked with formulaic measures like TF-IDF or BM25, while Google’s PageRank layered in basic link analysis to estimate authority.

Because these systems matched text instead of meaning, they missed pages that used different wording and returned only the “10 blue links,” leaving users to hunt for answers themselves.

Search started simple: crawl documents, build an inverted index, rank by term frequency-inverse document frequency (TF-IDF). PageRank layered in link popularity, but relevance still hinged on lexical overlap: words on pages.

Keeping this literal, document-centric past in view shows just how far modern, generative search has evolved and hints at where it will head next.
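To make the lexical approach concrete, here is a minimal sketch of an inverted index with TF-IDF scoring. The three-page corpus and the function name `tfidf_search` are invented for illustration, and the weighting is the simplest textbook form rather than what any production engine used.

```python
import math
from collections import Counter, defaultdict

# Hypothetical three-page corpus; the text is invented for illustration.
docs = {
    "page_a": "mexican lager brewing guide",
    "page_b": "lager yeast and fermentation temperature",
    "page_c": "guide to growing hops at home",
}

# Inverted index: term -> {doc_id: term frequency in that document}
index = defaultdict(dict)
for doc_id, text in docs.items():
    for term, tf in Counter(text.split()).items():
        index[term][doc_id] = tf

def tfidf_search(query):
    """Rank documents by the summed TF-IDF weight of the query terms they contain."""
    scores = defaultdict(float)
    for term in query.split():
        postings = index.get(term, {})
        if not postings:
            continue  # a term that appears nowhere contributes nothing
        idf = math.log(len(docs) / len(postings))  # rarer terms weigh more
        for doc_id, tf in postings.items():
            scores[doc_id] += tf * idf
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

results = tfidf_search("mexican lager")
print(results)
print(tfidf_search("cerveza"))  # no shared words, so nothing is returned
```

The second query shows the blind spot: a page about lager is never retrieved for a synonym it does not literally contain.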

Early use of AI in search

As machine learning and NLP entered the scene, search engines began interpreting context and intent rather than matching exact words. They learned that “NYC” is synonymous with “New York City” and could tell whether “Apple” meant fruit or a tech company from surrounding text.

Google’s RankBrain was the breakthrough, mapping unfamiliar queries to known topics with vector-based representations. The 2019 launch of BERT pushed this further, letting the engine grasp phrasing nuance and passage context almost conversationally.

Vector search soon became mainstream, embedding queries and content in high-dimensional space, so conceptually related information surfaced even when keywords differed.

Passage ranking drilled down to individual paragraphs, enabling a buried snippet to rank if it directly answers the question.

Today’s systems blend dense semantic vectors with sparse keyword signals in hybrid retrieval models, marrying neural understanding with lexical precision for unmatched relevance.

Semantic & neural IR

Around 2015, word-embedding models (word2vec, GloVe) proved that vectors capture meaning. Deep learning enables neural embeddings to map words, queries, and documents onto high-dimensional vectors where semantically related items cluster together.

Today, transformer encoders generate dense embeddings for queries, passages, and entities in real time.

Vector search lets Google return results for a query for “Mexican lager” that mention “Vienna malt,” “low-sulfur fermentation,” and “hops” without repeating the phrase. Passage ranking and neural re-ranking further refine those candidates.

Think about that pragmatically: Vector search exploits these embeddings to surface conceptually similar content. So, “When was Barack Obama born?” and “How old is the 44th president of the United States?” trigger the same answer, wiping out synonym blind spots.

This changed how content strategists and SEOs had to think to win.

Dense retrieval built on these vectors boosts recall well beyond BM25, yet it can miss niche terms or precise jargon that lexical models capture effortlessly.
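A toy version of vector search shows the idea. This is only a sketch: the three-dimensional vectors are hand-made stand-ins for real embeddings (which come from a trained encoder and have hundreds of dimensions), and the names `cosine` and `nearest` are my own.

```python
import math

# Hand-made 3-dimensional "embeddings". The axes loosely mean
# (beer, politics, gardening); every number here is invented.
passages = {
    "Vienna malt and clean fermentation define this pale beer style": [0.9, 0.0, 0.1],
    "The 44th president was born in Honolulu in 1961": [0.0, 1.0, 0.0],
    "Train hop bines up a trellis in early spring": [0.4, 0.0, 0.9],
}

def cosine(a, b):
    """Cosine similarity: the dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(query_vec, k=1):
    """Return the k passages whose vectors point most nearly the same way as the query."""
    ranked = sorted(passages, key=lambda p: cosine(query_vec, passages[p]), reverse=True)
    return ranked[:k]

# Assumed embedding for the query "Mexican lager". The query shares no
# words with the passage that ends up ranked first.
mexican_lager = [1.0, 0.0, 0.0]
top = nearest(mexican_lager)
print(top)
```

The match is made on direction in vector space, not on shared words, which is why the malt passage wins even though the query terms never appear in it.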

Hybrid retrieval

No model is perfect. That’s why Google marries sparse (keyword) and dense (vector) retrieval, then uses transformers to re-rank. Hybrid engines preserve precision on rare terms while capturing semantic flexibility for paraphrases.

Because generating and comparing millions of long vectors is computationally heavy, pure vector search can be resource-hungry and slower for straightforward lookups.

Modern engines therefore play “alchemist,” mixing sparse keyword signals with dense vectors in hybrid pipelines that fetch, merge, and re-rank candidate results.

This fusion delivers the precision of keywords plus the semantic reach of vectors, yielding noticeably better relevance across both common and long-tail queries.
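One common way to merge a keyword ranking with a vector ranking is reciprocal rank fusion (RRF). I am using it here purely as an illustration; nothing in this article confirms which fusion method Google or any other engine uses, and the document IDs below are hypothetical.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each doc scores sum(1 / (k + rank)) over the lists it appears in."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical outputs of a keyword retriever and a vector retriever.
sparse_hits = ["doc_jargon", "doc_both", "doc_keyword_only"]
dense_hits = ["doc_paraphrase", "doc_both", "doc_jargon"]

fused = reciprocal_rank_fusion([sparse_hits, dense_hits])
print(fused)
```

Documents that appear in both lists rise to the top, while a page found by only one retriever still stays in the pool for the re-ranker to consider.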

Even so, these systems still stop at retrieving and ranking passages, priming the landscape for generative AI, which moves from finding documents to composing answers.

Generative information retrieval & the rise of AI Overviews

Generative information retrieval is the leap from fetching documents to composing answers, transforming the search engine into an answer engine. It works through retrieval-augmented generation (RAG): The system first gathers the most relevant sources, then prompts a large language model to synthesize those facts into a single response.

Users see a cohesive explanation that embeddings and ranking still ground in real content, which reduces the need to click away.

But that power raises the stakes for accuracy, bias, and attribution, demanding rigorous safeguards and evaluation.

Search has moved from locating information to composing it, and the next frontier is ensuring those AI-written answers remain correct and trustworthy.

Jonas Sickler, Sr. Market Insights Analyst, Search and Gen AI at Terakeet explains that:

"Search has always been a performance channel defined by rankings, traffic, and conversions. But generative AI is redefining search as a brand channel by infusing subjectivity and opinion into responses. Even non-brand queries now carry significant branded implications. As SEOs and marketers, we must adopt new metrics that capture the full impact of generative search. Presence: is your brand visible for the topics that matter? Sentiment: is your brand recommended positively and enthusiastically? Accuracy: is information about your brand current, correct, and clear? Control: is your content being cited as a source for the response?"

RAG in a nutshell

Retrieval-Augmented Generation (RAG) pulls the most relevant passages from a hybrid index, feeds them into a large language model (LLM), and asks the LLM to write an answer with analyzed and validated citations.

Think of it as a “book-report” layer on top of search.

An orchestrator can route that prompt to a domain-specific or general-purpose LLM, choosing one or several models if, say, a medical question demands extra rigor.

The chosen LLM weaves the retrieved snippets into a coherent draft answer, effectively transforming raw hits into readable prose. An attribution module then traces each claim back to its source and embeds citations, so users can audit the evidence. A confidence engine scores this draft, suppressing or flagging low-trust outputs before they surface.

The user ultimately sees a synthesized response, often enriched with inline links, imagery, or step-by-step instructions. This pipeline preserves the precision of keywords and the reach of vectors while layering on the fluency of generative AI.

Because that power can amplify errors or bias, continuous quality controls and evaluation loops are now mission critical.
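The stages described above can be sketched in a few lines. Treat this as a simplified illustration under stated assumptions: `fake_llm` is a stub standing in for a real model call, the `Passage` scores are invented, and the confidence rule (mean retrieval score of cited passages) is my own placeholder for whatever a production confidence engine does.

```python
from dataclasses import dataclass

@dataclass
class Passage:
    source: str
    text: str
    score: float  # relevance score from an upstream retriever (invented values)

def build_prompt(question, passages):
    """Number each passage so the model can cite it as [1], [2], ..."""
    context = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(passages, start=1))
    return f"Answer using only the sources below and cite them.\n{context}\nQuestion: {question}"

def fake_llm(prompt):
    """Stand-in for a real model call; returns a canned, cited draft."""
    return "Lagers ferment cool with bottom-fermenting yeast [1]."

def answer(question, passages, top_k=2, min_confidence=0.5):
    # Retrieve: keep the top_k highest-scoring passages.
    top = sorted(passages, key=lambda p: p.score, reverse=True)[:top_k]
    # Generate: ask the (stubbed) model for a draft grounded in those passages.
    draft = fake_llm(build_prompt(question, top))
    # Attribute: keep only the sources whose citation marker appears in the draft.
    cited = [p.source for i, p in enumerate(top, start=1) if f"[{i}]" in draft]
    # Score: crude confidence, the mean retrieval score of the cited passages.
    confidence = sum(p.score for p in top if p.source in cited) / max(len(cited), 1)
    if confidence < min_confidence:
        return None  # suppress low-trust output
    return {"text": draft, "citations": cited, "confidence": confidence}

passages = [
    Passage("example.com/lager", "Lagers ferment cool with bottom-fermenting yeast.", 0.9),
    Passage("example.com/ale", "Ales ferment warm with top-fermenting yeast.", 0.7),
    Passage("example.com/hops", "Hops add bitterness and aroma.", 0.2),
]
result = answer("How is lager fermented?", passages)
print(result)
```

Note what happens to the uncited page: it was retrieved, but because the draft never drew on it, it earns no attribution. That is the practical difference between being indexed and being cited.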

How AI Overviews work

Retrieve → generate → align/cite

The system grounds the answer in the passages you just saw, adds attribution, and then runs safety filters. In March 2025, AI Overview frequency spiked in unexpected verticals, proving the feature was no longer experimental.

The magic happens when the query is analyzed and expanded into a collection of queries (behind the scenes) across a learning or buying journey. This elevates more candidate pages to consider when crafting the response.

This “Query Fan-Out” is the subject of a great deal of SEO research today. Understanding it, and how it can be applied to building content strategy, will lead to the next breakthroughs in the SEO space.

Modern AI Overviews start by retrieving a pool of candidate documents. They combine the original query with all the queries in the “fan-out” and score candidates on both query-dependent factors (e.g., text relevance) and query-independent factors (e.g., site quality and authority).
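Here is a rough sketch of that candidate-pool step. Everything in it is hypothetical: the expansion templates in `fan_out`, the toy index, the authority scores, and the 70/30 blend between relevance and authority are assumptions chosen to show the mechanism, not values any search engine has published.

```python
def fan_out(query):
    """Hypothetical expansion; a real system would predict sub-queries with a model."""
    templates = ["what is {q}", "best {q}", "{q} vs alternatives", "how to choose {q}"]
    return [query] + [t.format(q=query) for t in templates]

# Toy index: sub-query -> list of (url, text relevance). All values invented.
toy_index = {
    "crm software": [("a.com/crm", 0.8)],
    "what is crm software": [("a.com/crm-guide", 0.9), ("b.com/crm", 0.6)],
    "best crm software": [("b.com/best-crm", 0.7), ("a.com/crm", 0.5)],
    "crm software vs alternatives": [("a.com/compare", 0.6)],
}
# Query-independent prior per site (quality, authority), also invented.
site_authority = {"a.com": 0.9, "b.com": 0.4}

def candidate_pool(query, authority_weight=0.3):
    """Pool candidates across every fan-out query, blending relevance with site authority."""
    pool = {}
    for sub_query in fan_out(query):
        for url, relevance in toy_index.get(sub_query, []):
            site = url.split("/")[0]
            score = (1 - authority_weight) * relevance + authority_weight * site_authority[site]
            pool[url] = max(pool.get(url, 0.0), score)  # keep each page's best showing
    return sorted(pool.items(), key=lambda kv: kv[1], reverse=True)

pool = candidate_pool("crm software")
for url, score in pool:
    print(f"{score:.2f}  {url}")
```

The original query alone matches one page in this toy index; the fan-out surfaces five. A site with pages answering several of the expanded intents gets more chances to land in the pool.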

An LLM then drafts an answer, but every token it emits is immediately checked against the source passages, anchoring each claim to something verifiable. The system favors facts repeated across multiple sources, marginalizing outliers unless they come from exceptionally trusted pages.

At the same time, it promotes diversity: A unique viewpoint or niche detail may be retained if it enriches the explanation, and the source has strong credibility.

Consensus and diversity signals jointly shape the final text, balancing reliability with breadth.
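The interplay of consensus and trusted outliers can be illustrated with a small filter. This is a deliberately naive sketch: the claims, trust scores, and thresholds are invented, and real systems would compare claims semantically rather than by exact string match.

```python
from collections import defaultdict

# Hypothetical extracted claims: (source, trust score 0-1, normalized claim text).
claims = [
    ("site-a.example", 0.6, "lagers ferment at cool temperatures"),
    ("site-b.example", 0.5, "lagers ferment at cool temperatures"),
    ("site-c.example", 0.7, "lagers ferment at cool temperatures"),
    ("site-d.example", 0.95, "decoction mashing deepens malt flavor"),
    ("site-e.example", 0.3, "lagers ferment in two days"),
]

def select_claims(claims, min_sources=2, trusted=0.9):
    """Keep claims backed by several sources, or by a single highly trusted one."""
    support = defaultdict(list)
    for source, trust, claim in claims:
        support[claim].append((source, trust))
    kept = {}
    for claim, backers in support.items():
        consensus = len(backers) >= min_sources
        trusted_outlier = max(t for _, t in backers) >= trusted
        if consensus or trusted_outlier:
            kept[claim] = [s for s, _ in backers]
    return kept

kept = select_claims(claims)
for claim, sources in kept.items():
    print(claim, "<-", sources)
```

The corroborated claim and the unique detail from a highly trusted source both survive; the uncorroborated claim from a low-trust source is dropped.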

Once the answer is composed, a grounding layer maps each phrase back to its evidence and attaches clickable citations for transparency.

Frameworks like REALM (embedding retrieval), RETRO (token-level evidence injection), and RAW/RARR (claim checking and revision) automate these alignment loops.

This pipeline limits hallucinations by forcing the model to “show its work” before surfacing content to users.

For publishers, high-quality pages become more visible because authoritative sources are the first selected for summarization and attribution.

AI Overviews combine rigorous retrieval, token-level alignment, consensus filtering, and explicit citations to generate answers that are both helpful and trustworthy.

For creators, the payoff of producing rich, corroborated information is clear: your content becomes the building block of the synthesized response.

As Lily Ray, vice president, SEO Strategy & Research at Amsive, explains:

“Search is changing fast with the rise of AI Overviews, AI Mode, ChatGPT, Perplexity, and other LLMs. These platforms create more conversational, context-aware experiences that often keep users inside the LLM interface rather than sending traffic out to websites, which represents a major shift from traditional SEO.

On top of that, tracking performance in LLMs is harder, since outputs are dynamically generated based on real-time context, personalization, and retrieval-augmented generation (RAG), making some legacy SEO rank tracking tools less effective.

But SEO still matters. Visibility in AI search still relies on many SEO fundamentals: high-quality, authoritative content, clean technical architecture, and structured data. A lot of the approaches currently being branded as "GEO" or "AEO" just mean applying long-standing SEO practices to new formats - like targeting question-based queries, optimizing for entities, building trust signals, and using Schema markup (such as FAQ / Q&A Schema) to increase citation potential. Ignoring these essentials or chasing spammy shortcuts for the sake of AI visibility can hurt organic visibility and even brand credibility.

As LLMs evolve, their training and alignment processes are becoming more robust against injection and poisoning attacks, making spammy shortcuts far less effective and easier to detect over time. As with all things SEO, playing the long game will prove to be most effective over time. Focus on building an authoritative, respected brand known for providing high-quality, original, trustworthy content, leveraging multiple platforms and modes of content to convey your message.”

Generative search rewards authoritative clarity.
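As one concrete example of the Schema markup mentioned in the quote above, the sketch below builds a schema.org `FAQPage` object as JSON-LD. The helper name `faq_jsonld` and the sample question are my own; whether any given AI feature uses this markup when choosing citations is not something this example can confirm.

```python
import json

def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

markup = faq_jsonld([
    ("What is query fan-out?",
     "The expansion of one search into several related queries before retrieval."),
])
# The serialized object is typically embedded in a
# <script type="application/ld+json"> tag on the page.
print(json.dumps(markup, indent=2))
```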

Gemini innovations & deep search

At I/O 2025, Google unveiled technology to power deeper, multimodal queries (“Plan a three-day gluten-free brewery tour in Oregon with kid-friendly stops”). AI Mode can answer, show a dynamic itinerary, and even generate a custom map without a single click.

Where is this headed? From Garrett Sussman, host of The SEO Weekly:

“You've got 6-12 months before we're all forced to adjust to a default AI Mode on Google. In the short term, search behavior will change when people are confronted with a fundamentally different way to search. Long-term, search will be emulsified into AI assistants. The hope is that, regardless of the conversation search platform you target, the highest-quality brands and content will be rewarded for their excellence. There will be growing pains. But the best relevance engineers will be able to elevate the value of their skill sets and become one of the most important marketers in their business.”

Trust, authority & bias: The new ranking battlefield

Understanding the mechanics of RAG is only part of the battle. The critical question for strategists is: on what basis does this new system decide which content to retrieve and cite? The answer lies in a new set of methods for analyzing text and content.

AI doesn’t eliminate bias; it can magnify it. During UK antitrust probes in June 2025, regulators cited concerns that AI-driven SERP features unfairly favored Google properties.

For publishers, that means:

- Factual grounding: Back every claim with primary data and clear demonstrations of knowledge.

- Perspective diversity: Demonstrate you’ve covered multiple viewpoints; consensus is a filter, but diversity wins complex queries. A point of view wins when it is legitimate, clear, and differentiated.

- Accessibility parity: Alt-text, contrast ratios, and screen-reader compliance are no longer “nice to have”; they’re machine-read signals of care.

Siteimprove: Building authoritative content intelligence

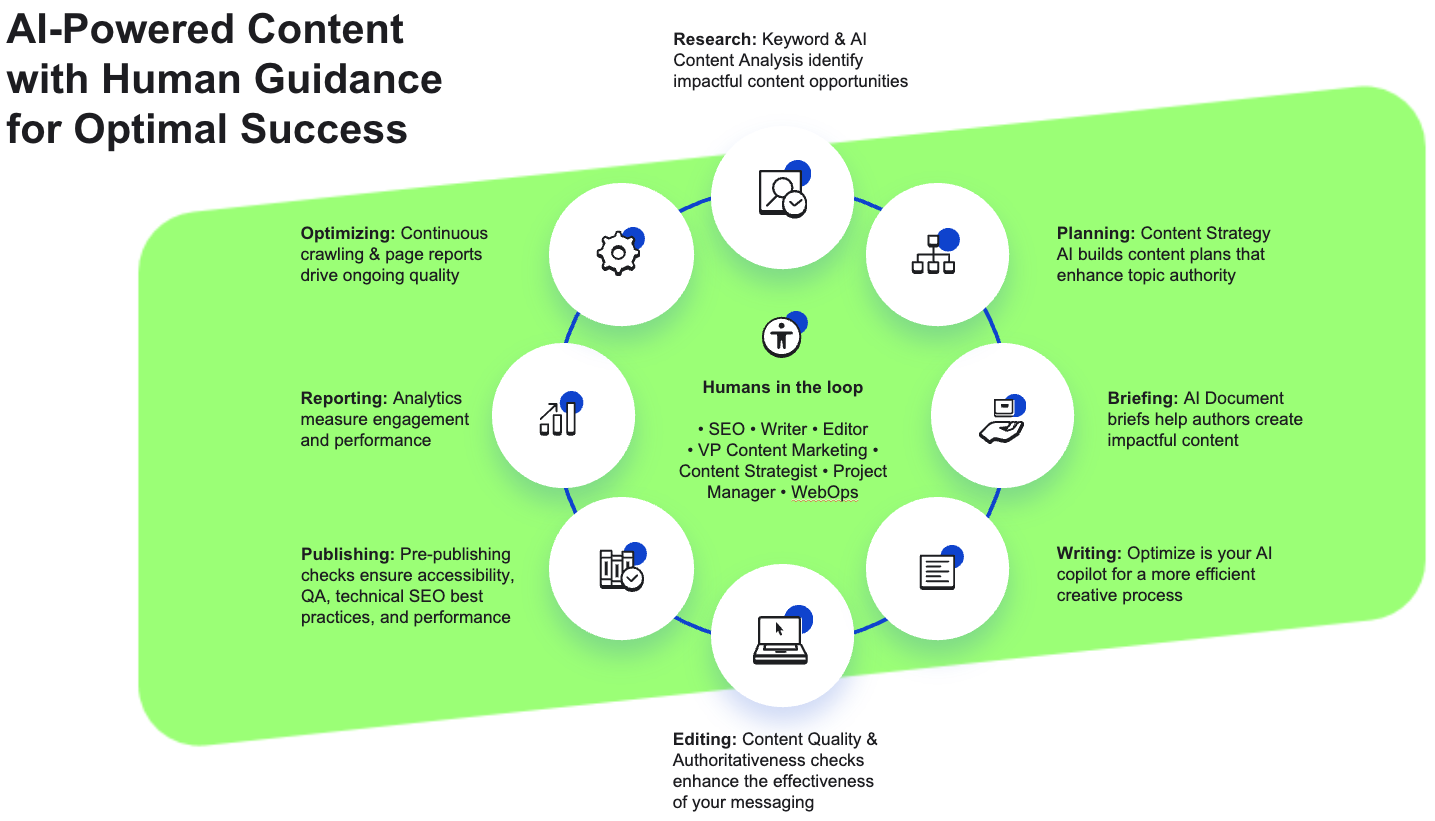

When Siteimprove acquired MarketMuse, we set a shared goal: creating AI solutions and agents that handle planning, briefing, writing, auditing, and monitoring while keeping humans in the loop.

This is the future of content intelligence.

Picture this pipeline:

| Stage | Agentic Power | Human Intervention |
| --- | --- | --- |
| Research | AI scans SERP, vector DBs, and your own corpus to find opportunity gaps | Strategist refines business relevance |
| Planning | Auto-clusters keywords by intent & authority delta | SEO lead approves roadmap |
| Briefing | Generates outline, internal links, customization needs to differentiate | Editor adjusts POV & narrative |
| Drafting | Assisting AI writes with a human-in-the-loop, enforcing brand tone, getting more refined over time and use | SME validates and adds expertise |
| QA & Governance | Accessibility, performance, and checks via intelligent crawler | Team fixes flagged issues |
| Performance Insight | Unified analytics dashboards and conversational analytics | Content leads reallocate budget |

The result: Compounding authority and the ability to adopt human-in-the-loop AI for any team.

Practical playbook: Future-proof SEO & content strategy

Theory is valuable, but strategy lives in execution. The principles of Content Intelligence are not abstract. They translate directly into a modern playbook designed for a retrieval-first world. Here are the most critical operational shifts for any content team aiming to build durable authority.

These aren't just refreshed best practices.

They’re targeted optimizations for an era where success means being retrieved, cited, and trusted by both users and the AI that serves them.

Own the knowledge graph

- Map entities and relationships inside your niche.

- Learn how to assess your existing site and convert unstructured content to knowledge graphs and semantic relationships.

- Build cornerstone content that comprehensively covers each cluster and differentiates with a unique point of view.

- Make sure everything you do is accessible and succeeds with machines in mind.
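Mapping entities and relationships can start as simply as a list of triples. The sketch below is a minimal illustration with an invented brewing niche; the triples, the `covered` set, and the helper names are all hypothetical, and a real project would extract entities from your content rather than type them by hand.

```python
from collections import defaultdict

# Hypothetical (subject, relation, object) triples for a brewing niche.
triples = [
    ("lager", "uses", "bottom-fermenting yeast"),
    ("lager", "has_style", "mexican lager"),
    ("mexican lager", "uses", "vienna malt"),
    ("ale", "uses", "top-fermenting yeast"),
]
# Entities that already have a dedicated page on the (imaginary) site.
covered = {"lager", "ale", "vienna malt"}

graph = defaultdict(list)
entities = set()
for subject, relation, obj in triples:
    graph[subject].append((relation, obj))
    entities.update((subject, obj))

def coverage_gaps():
    """Entities in the graph with no page of their own, sorted for stable output."""
    return sorted(entities - covered)

def neighbors(entity):
    """Directly related entities: candidates for internal links from that page."""
    return [obj for _, obj in graph.get(entity, [])]

gaps = coverage_gaps()
print("Gaps:", gaps)
print("Link from the lager page to:", neighbors("lager"))
```

Even at this scale the graph answers two planning questions at once: which entities still need cornerstone content, and which pages should link to each other.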

Optimize for retrieval-first, generation-second

- Dense coverage of subtopics will improve vector recall.

- Clear, concise passages are ideal materials for language model summarization.

- Win across multiple related queries covering the funnel and the “fan-out”.

- Achieve attribution throughout the AI Overview and control the narrative.

Measure beyond blue links

- Understand the impact of every SERP Feature in your results.

- Correlate passthrough traffic with brand search lift to capture latent demand.
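For the correlation step, a plain Pearson coefficient is a reasonable first check. The weekly figures below are invented for illustration, and the function is a textbook implementation rather than a Siteimprove feature.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Invented weekly figures: clicks passed through from AI features, and branded searches.
passthrough_clicks = [120, 150, 170, 160, 210, 240]
brand_searches = [900, 960, 1010, 990, 1100, 1180]

r = pearson(passthrough_clicks, brand_searches)
print(f"correlation: {r:.3f}")
```

A value near 1 means the two series move together. That is a prompt for further investigation, not proof that one causes the other; seasonality or a campaign could drive both.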

Double-down on accessibility & performance

- Google publicly ties page experience and trust to ranking, even within AI features.

- Use Siteimprove’s unified scores to prioritize fixes that amplify both UX and SEO.

One checklist you can use now

Executing the playbook requires a new kind of editorial rigor. You need one that balances AI-driven scale with human-led quality control. I’ve put together a checklist to provide that governance. It’s a framework to manage the entire AI-assisted editorial system effectively. It will help put the 'authority' into Authoritative Intelligence.

Modern content must satisfy two audiences: humans and the AI systems that increasingly surface, summarize, and rank information. The best way to win with both is to optimize for real users first: craft a holistic narrative that threads through every stage of the buyer journey, map competitive gaps, and ground the work in clear evaluation rubrics that spell out what differentiation and expertise mean for your brand. Throughout, preserve context and voice so the material feels authentic even when AI tools lend a hand.

Quality, therefore, hinges on a disciplined process. Adopt a tiered standard that distinguishes where pixel-perfect polish is essential and where speed is most important. Start developmental editing early to lock in structure and point of view. Blend AI insights with human judgment, leaning on refined models, but always fact-check and enforce rigorous copy edits, version control, semantic optimization, and continuous performance monitoring. Above all, begin earlier: shape tone, structure, and POV before a single draft is generated so every stakeholder can collaborate around a shared, strategic blueprint.

Here’s a checklist to ensure AI adoption is a success:

- Point of view: Clearly define and maintain a unique perspective or opinion throughout your content to differentiate from generic or AI-driven content.

- Expertise: Feature and validate subject-matter expertise to reinforce trustworthiness and demonstrate authority.

- Personal experience: Regularly integrate authentic, firsthand experiences and original examples into content to enhance credibility and relatability.

- Style: Ensure consistency in voice, presentation, and formatting guidelines aligned to brand identity and editorial standards.

- Tone: Tailor tone precisely to audience expectations, context, and content purpose, ranging appropriately from formal to conversational.

- Proof statements: Include compelling evidence and proof points to substantiate key claims, building audience trust and engagement.

- Fact statements: Explicitly verify and cite all factual claims, maintaining a rigorous approach to accuracy and credibility.

- Target market and ICP: Continuously reference clearly defined Ideal Customer Profiles (ICP) and target market segments during content creation and refinement.

- Intent details: Clearly articulate the intended user intent for every content piece, matching user expectations and search motivations accurately.

- Structural differentiation: Adopt clear and unique content structures or formats that differentiate your content visually and contextually from competitors.

- Personalization detail: Incorporate detailed personalization elements, ensuring your content resonates emotionally and contextually with intended readers.

AI editorial excellence framework

Start earlier. Don’t just patch drafts after the fact. Up-front planning, a clear point of view, and a Human-in-the-Loop (HITL) checklist turn “good enough” into “standout.” Inject your unique lens, genuine expertise, and brand-aligned tone; back every claim with verifiable proof; and match structure, voice, and intent to your ideal customer’s motivations. In short: you can’t edit your way into excellence; you must plan for it.

Smart software makes discipline scalable.

Siteimprove maps the roadmap to topical authority by revealing coverage gaps, depth opportunities, and the signals AI models trust. Siteimprove safeguards everything post-publication, enforcing readability, SEO integrity, and technical health as search behaviors shift toward AI Overviews. Siteimprove sets the direction and locks in performance, keeping your content aligned with evolving AI ecosystems while sustaining editorial excellence end to end.

Final thought: Lead with authoritative content intelligence

AI can’t manufacture genuine expertise or lived experience, but it can expose mediocrity at scale. If you cultivate authoritative depth, integrate a point of view, build accessibility into every template, and let AI agents handle the manual labor under human supervision, the next decade of search looks less like a threat and more like the biggest growth canvas we’ve ever seen.