If website accessibility is part of your work, chances are you rely on automated testing. Click a button and let it do its thing, right?

But have you ever wondered what goes into creating the rules that govern accessibility testing? Or who makes the rules?

As co-chair of the Accessibility Conformance Testing Rules Community Group (or as we call it, ACT-R), I can tell you that the rules don’t come out of nowhere. Each one takes hours to write, review, and tinker with, by multiple people, often over the course of days. By my estimate, development of each new testing rule takes a total of about 50-200 hours and requires the expertise of 4-5 people.

Speaking for myself, I dedicate a day per week to reviewing and writing rules, host a bi-monthly call with the group, and spend additional time each week syncing with my co-chair, Carlos Duarte, an expert in Human-Computer Interaction (HCI) from the University of Lisbon.

It does take a lot of time, but it’s also incredibly gratifying. Reliable testing rules are key to detecting failures, which signal digital barriers for people with disabilities, and to preventing false positives (alerts that there’s a problem when there really isn’t). They also go a long way toward saving time for web ops experts. A win for inclusivity and business!

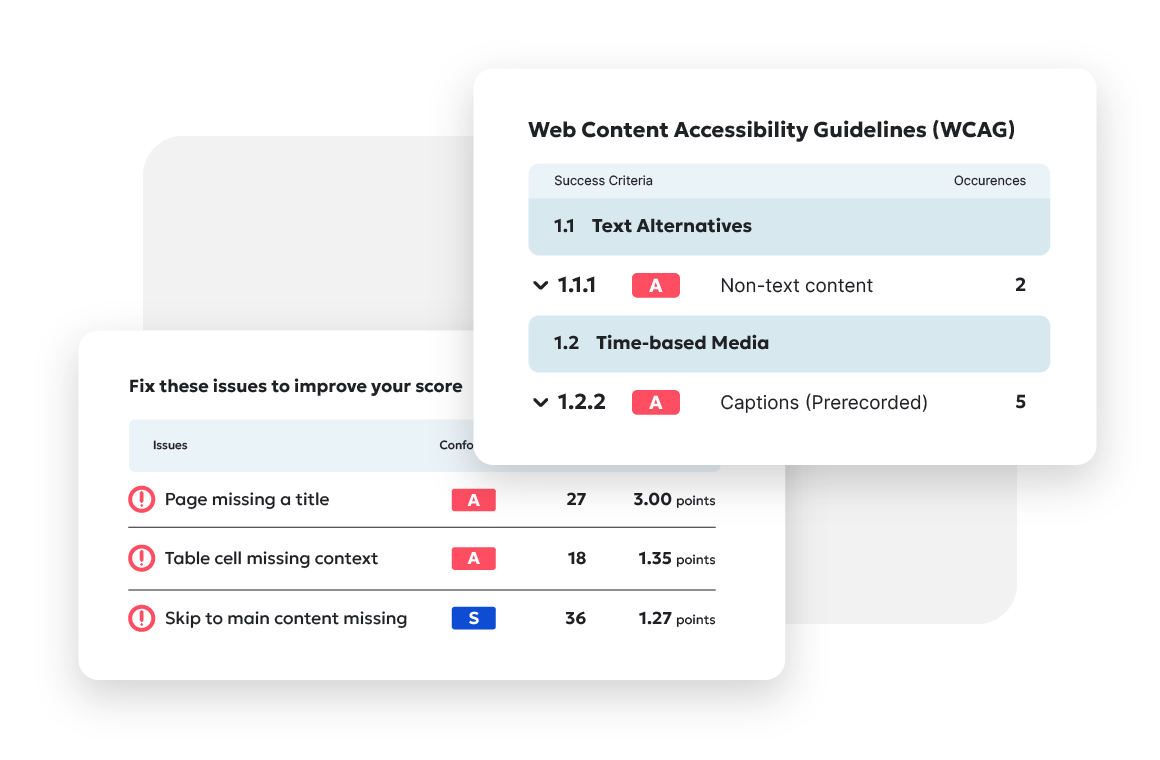

Lately I’ve been deep in the weeds of WCAG 2.2, the latest version of the Web Content Accessibility Guidelines, released in October 2023. A new version takes hard work from accessibility experts like me, Carlos, and others, who pore over the success criteria and do our part to develop the testing rules that uncover failures to meet those criteria.

Why ACT exists

Right out of the gate, it’s important to know that people can interpret WCAG criteria differently, and that’s why Accessibility Conformance Testing exists. For example, success criterion 1.4.3 (Contrast (Minimum)) ensures that there’s sufficient contrast between text and background colors so that people with low vision or color vision deficiencies can read the text. Ambiguity in that criterion can lead designers and tools to calculate contrast ratios differently, and this inconsistency naturally creates problems for the very users the criterion is meant to protect.

ACT ensures that we all agree on the same methods to assess if a criterion, such as color contrast, is met or not.
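For a sense of what a shared method looks like, here is a minimal sketch of the contrast-ratio formula that WCAG 2.x defines for success criterion 1.4.3. It assumes opaque sRGB colors written as six-digit hex strings and deliberately ignores the hard cases (transparency, gradients, background images, text shadows) where tools tend to diverge. The function names, including `meets_1_4_3`, are illustrative and not taken from any ACT rule or testing engine.

```python
def _channel_to_linear(value: int) -> float:
    """Linearize one 8-bit sRGB channel per WCAG 2.x's relative luminance definition."""
    c = value / 255
    # WCAG 2.x uses 0.03928 here; for 8-bit input it matches sRGB's 0.04045.
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of an opaque color like '#767676' (0.0 = black, 1.0 = white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _channel_to_linear(r)
            + 0.7152 * _channel_to_linear(g)
            + 0.0722 * _channel_to_linear(b))


def contrast_ratio(foreground: str, background: str) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color's luminance."""
    l1, l2 = sorted((relative_luminance(foreground),
                     relative_luminance(background)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def meets_1_4_3(foreground: str, background: str, large_text: bool = False) -> bool:
    """Level AA thresholds: 4.5:1 for normal text, 3:1 for large text, never rounded."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

With these definitions, `#767676` on white comes out at roughly 4.54:1 and passes, while `#777777` lands at about 4.48:1 and fails for normal text. A tool that rounded the ratio to one decimal would report 4.5:1 and pass it, which is exactly the kind of small divergence a shared rule is meant to rule out.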

How a new accessibility testing rule gets made

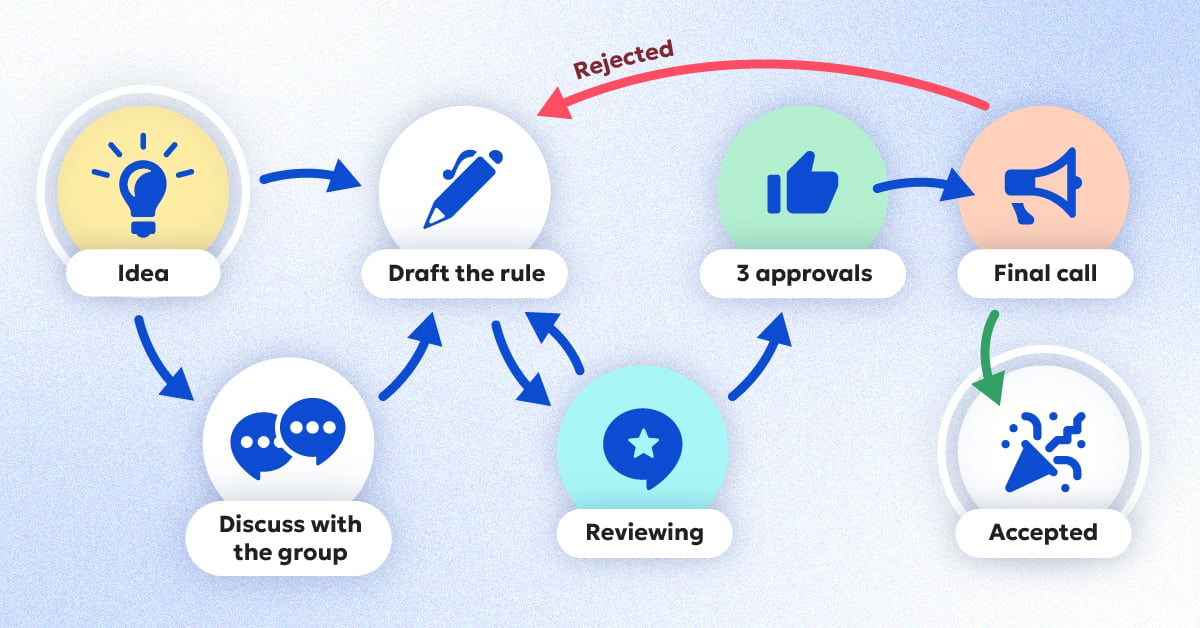

So, how are new testing rules created? And how does everyone in the ACT-R Community Group agree on a rule?

It’s typically a matter of intensive review, tinkering, and consensus-building. Once anyone in the group drafts a rule, three participants take it on. They come from organizations ranging from Siteimprove and other accessibility testing providers like Deque to companies as large as IBM, CVS, and Airbnb, and they adjust, tinker, debate, agree, and disagree until they reach consensus. The rule then moves on to the W3C Accessibility Guidelines Working Group, which maintains WCAG, for final approval.

Along the way, anyone else in the group beyond those three can jump in with their feedback and sniff out any problems with it. If one of the participants does find an issue, the rule goes back to the start of the process.

Once the W3C Accessibility Guidelines Working Group approves the rule, it’s published on the W3C Web Accessibility Initiative (WAI) website.

What we look for

These W3C-approved testing rules form the core of automated accessibility checks, including the ones we use in the Siteimprove platform. We take great care to make sure the rules are robust and reliable, because there are certainly scenarios in which automated testing fails, either by missing issues that should be flagged or by flagging issues that aren’t really there.

There are two main blockers the group considers when assessing whether a success criterion can be automated:

- Heading off false positives: We can easily detect some valid patterns, but we can't be sure the page doesn't implement a trickier, still valid pattern. So we can never be 100% sure of a failure automatically, and we don't want a rule that produces a lot of false positives.

- Valid reproduction: Sometimes a criterion simply can’t be tested by software because the actions involved can’t be reproduced. For example, an automated tool can’t mimic dragging and dropping with a mouse.
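To make the false-positive trade-off concrete, here is a minimal, hypothetical sketch of how a check might be structured so that it reports a failure only when it is sure. It uses a focus-indicator check as the example; `FocusObservation`, its fields, and `focus_visible_outcome` are illustrative names, not part of any ACT rule or of Alfa. The "passed" and "failed" outcomes follow the ACT Rules Format's vocabulary, and "cantTell" borrows from W3C's EARL vocabulary for results a tool cannot decide on its own.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    PASSED = "passed"
    FAILED = "failed"
    CANT_TELL = "cantTell"  # hand the case off to a human reviewer


@dataclass
class FocusObservation:
    """Hypothetical facts a tool might record after focusing one element."""
    outline_visible_on_focus: bool      # an easy, valid pattern to confirm
    other_styles_change_on_focus: bool  # e.g. background or border changes
    focus_event_listeners: bool         # a script might draw an indicator we can't judge


def focus_visible_outcome(obs: FocusObservation) -> Outcome:
    # A valid pattern the tool can confirm on its own: report a pass.
    if obs.outline_visible_on_focus:
        return Outcome.PASSED
    # Possibly valid but trickier patterns: don't guess, ask a person.
    if obs.other_styles_change_on_focus or obs.focus_event_listeners:
        return Outcome.CANT_TELL
    # Nothing observable changes on focus: the only case reported as a failure.
    return Outcome.FAILED
```

The key design choice is the middle branch: rather than treating every missing outline as a failure, the check routes anything it can't verify to manual review, trading a little automation for far fewer false positives.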

False positives are our primary concern: If a test produces too many false positives, we can’t in good conscience recommend automating it. After all, reviewing content issues that don’t actually exist wastes enormous amounts of time, including for our customers. (Just to give you an idea of how needlessly messy it can get, I’ve seen a single faulty testing rule create tens of millions of false positives across our customer base. Obviously, we were quick to fix it in an update.)

After the WCAG 2.2 release, we gave the green light to automating just one new testing rule, because it was the only one the ACT-R Community Group determined could produce adequate results.

Unfortunately, some other companies that offer accessibility testing claim that their tools automate more rules than is actually possible from ACT’s collective point of view. It might seem harmless enough, but this practice inevitably yields poor results that create unnecessary work, and it risks souring teams against accessibility in general, which is never a good thing.

It’s not unlike the buyer-beware situation with claims that accessibility overlays can magically make websites compliant: Those might promise the world, but companies that use them are still at risk of being sued.

The accessibility community acknowledges that only about one-third of WCAG success criteria can be tested automatically. All the other criteria require some level of manual testing by a person who knows what to look for and what disabilities to replicate.

How to avoid shortcuts that short-circuit

Automated testing certainly saves a lot of time and money, when it’s appropriate, but if you rely on it too much, you won’t be protected from all compliance issues, nor will you be assured of providing all your users with an accessible experience.

Your best bet is to go with a vendor that puts its money where its mouth is by being active in the accessibility testing community. Also, be sure to ask how closely the vendor’s testing engine aligns with ACT rules, and note that W3C, which publishes WCAG, provides implementation reports showing how different testing engines implement the ACT rules. (Because Siteimprove’s testing engine, Alfa, is open source, you can easily see what we’ve implemented.)

One of the best ways to zero in on the top vendors is to take a look at reports like The Forrester Wave: Digital Accessibility Platforms, which assesses vendors based on a number of criteria, including their involvement with the community.

If your web accessibility vendor doesn’t follow ACT rules, you run the risk of inconsistent testing that’s not in line with the W3C industry standard of accessibility testing, which in turn puts you at risk of running afoul of requirements like those of the ADA.

It’s not, however, just a matter of making sure you’re following the rules: It’s a matter of making sure that at every digital juncture, you’re truly serving — not alienating — the people who the rules were made to protect and include.

Ready to create more accessible and inclusive web content?

Siteimprove Accessibility can help you create an inclusive digital presence for all.
