Should We Prioritize LLM Traffic? Start by Valuing It.

Brands don't need perfect visibility into large language models to make wise decisions. First, quantify the business value of your current LLM traffic using a strict LLM segment in Siteimprove; then allocate resources accordingly. Prioritize LLM traffic only if the measured prize is real.

Before chasing "LLM SEO," prove the channel's value with the help of Siteimprove's AI Visibility dashboard. In this article, we'll:

- Use a Siteimprove dashboard to pull the necessary snapshot.

- Quantify attributable clicks, journey presence, and downstream lift.

- Apply conservative thresholds to decide: prioritize, monitor, or deprioritize.

- If greenlit, run a tight 60-day plan; otherwise, reallocate to higher-yield work.

First, let's define what counts as LLM traffic today.

Define the playing field: What counts as LLM traffic (today)

LLM traffic splits into three buckets: attributable clicks, assisted influence, and zero-click exposure. Bot traffic is excluded from all three.

It's essential to understand these buckets because your dashboard, thresholds, and decisions depend on them. Here's a brief overview of what each one entails:

- Attributable LLM: Human visits from answer engines that appear in referrers and landing pages.

- Assisted influence: Journeys in which an LLM answer appears at an upstream step and the conversion happens later.

- Zero-click exposure: Your brand or pages cited in answers without a click, visible only indirectly through branded/direct lift and support deflection.

- Exclusions (bots): Non-human fetches removed via filters to keep the cohort clean.

Note that "LLM referrals" here include ChatGPT, Gemini, Perplexity, and similar systems, but not Google's AI Overviews.
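To make the attributable bucket concrete, here is a minimal Python sketch of how a strict LLM segment could be carved out of raw session records. It is not Siteimprove's segment logic: it assumes each session is a dict with hypothetical `referrer` and `user_agent` fields, and the referrer hosts and bot markers are illustrative lists you would adjust to what your own reports show. Consistent with the note above, Google referrers (including AI Overviews) fall into `other`. Assisted influence and zero-click exposure can't be tagged this way; they need journey data and proxies.

```python
from collections import Counter
from urllib.parse import urlsplit

# Illustrative answer-engine referrer hosts (an assumption: adjust to what
# your own referrer reports actually show).
LLM_REFERRER_HOSTS = {
    "chatgpt.com",
    "chat.openai.com",
    "gemini.google.com",
    "perplexity.ai",
    "www.perplexity.ai",
    "copilot.microsoft.com",
}

# Crude, illustrative markers for non-human fetches. Crawler names such as
# GPTBot and PerplexityBot are caught by the "bot" substring.
BOT_MARKERS = ("bot", "crawler", "spider")


def is_bot(user_agent: str) -> bool:
    """Return True if the user agent looks like an automated fetch."""
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)


def classify_session(session: dict) -> str:
    """Bucket one session as 'excluded_bot', 'attributable_llm', or 'other'."""
    if is_bot(session.get("user_agent", "")):
        return "excluded_bot"
    # urlsplit lowercases the hostname; an empty referrer yields None.
    host = urlsplit(session.get("referrer", "")).hostname or ""
    if host in LLM_REFERRER_HOSTS:
        return "attributable_llm"
    return "other"


# Made-up sample sessions, for illustration only.
sample = [
    {"referrer": "https://chatgpt.com/", "user_agent": "Mozilla/5.0"},
    {"referrer": "https://www.google.com/", "user_agent": "Mozilla/5.0"},
    {"referrer": "", "user_agent": "Mozilla/5.0 (compatible; GPTBot/1.0)"},
]
print(Counter(classify_session(s) for s in sample))
```

Filtering bots before matching referrers keeps crawler fetches that carry an answer-engine referrer out of the human cohort.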

The 30-Minute Siteimprove snapshot (Use this dashboard)

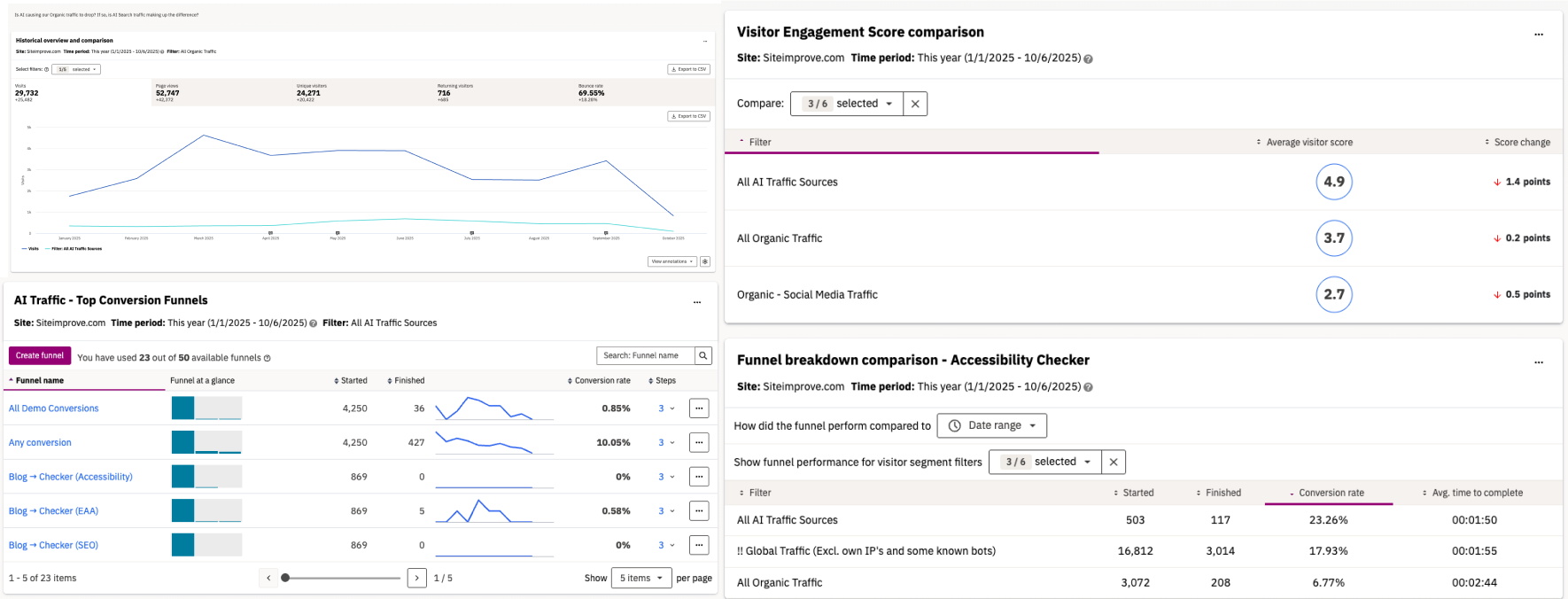

A single Siteimprove dashboard delivers a fast value read using a strict LLM segment across key widgets. If LLM sessions are a small and unstable share, deprioritize them. If they are meaningful and steady, proceed.

Here are the questions to ask.

- Is there volume worth caring about? Beyond the raw share, check whether the pattern is stable enough to bet on and whether the momentum is genuine rather than noise.

- Is the LLM cohort engaged and present in the journey? The visitor engagement score comparison indicates whether that cohort passes a quality bar. AI Traffic — Top Conversion Funnels reveals assisted behavior (present upstream, not last click).

- Is there downstream lift that merits prioritization? Comparing funnel breakdowns for AI traffic against traditional organic search traffic shows whether LLM traffic is actually directing users into conversion flows.
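For the first question, one way to separate real volume from noise is to look at the LLM share of organic sessions week by week across the 28-day window and measure how much it wobbles. The sketch below assumes you can export weekly session counts; the coefficient-of-variation check and its `max_cv=0.25` tolerance are hypothetical choices for illustration, not Siteimprove features or thresholds from this article.

```python
from statistics import mean, pstdev


def weekly_shares(llm_sessions: list[int], organic_sessions: list[int]) -> list[float]:
    """LLM sessions as a share of organic sessions, one value per week."""
    if len(llm_sessions) != len(organic_sessions):
        raise ValueError("need one LLM count and one organic count per week")
    return [llm / org if org else 0.0 for llm, org in zip(llm_sessions, organic_sessions)]


def stability_read(llm_sessions: list[int], organic_sessions: list[int],
                   max_cv: float = 0.25) -> dict:
    """Summarize volume share and week-to-week stability for the snapshot window.

    max_cv is a hypothetical tolerance: a coefficient of variation above it
    is read as noise rather than a pattern worth betting on.
    """
    shares = weekly_shares(llm_sessions, organic_sessions)
    total_organic = sum(organic_sessions)
    overall = sum(llm_sessions) / total_organic if total_organic else 0.0
    avg = mean(shares)
    # Coefficient of variation: spread of weekly shares relative to their mean.
    cv = pstdev(shares) / avg if avg else float("inf")
    return {"overall_share": overall, "weekly_cv": cv, "stable": cv <= max_cv}


# Four weekly counts covering the 28-day window (made-up numbers).
print(stability_read([200, 210, 190, 200], [10_000] * 4))
```

A steady 2% share reads as stable here, while the same average built from one spiky week would not.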

Decision thresholds (the "answer")

Conservative 28-day thresholds turn the snapshot into a clear decision: prioritize, monitor, or deprioritize.

Here's a simple one-minute rule to help you reach that decision. If you can answer yes to two or more of these questions, prioritize; otherwise, don't.

- Is LLM traffic meaningful? Look at LLM Volume Share. Yes, if it's 2% or more of organic sessions. (If unsure, round down.)

- Are those visitors moving toward pricing/demo? Check Downstream Lift from LLM landings to pricing/demo pages. Yes, if it's +15% or more over the last 28 days.

- Are we ready to benefit? Glance at Readiness (SEO/Accessibility/Performance on target pages). Yes, if issues are trending down and Core Web Vitals are passing.
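The one-minute rule translates directly into code. This sketch assumes a hypothetical `Snapshot` of 28-day numbers and treats Downstream Lift as the relative change in the share of LLM-landed sessions that reach pricing/demo pages versus the previous 28 days; that baseline is an assumption, so swap in whatever comparison your dashboard actually reports.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Hypothetical 28-day readout; field names are illustrative, not Siteimprove's."""

    llm_sessions: int
    organic_sessions: int
    pricing_rate_now: float    # share of LLM-landed sessions reaching pricing/demo, last 28 days
    pricing_rate_prior: float  # same share for the previous 28 days (assumed baseline)
    issues_trending_down: bool
    core_web_vitals_passing: bool


def volume_share(s: Snapshot) -> float:
    return s.llm_sessions / s.organic_sessions if s.organic_sessions else 0.0


def downstream_lift(s: Snapshot) -> float:
    """Relative change in the pricing/demo rate; no baseline counts as no lift."""
    if not s.pricing_rate_prior:
        return 0.0
    return s.pricing_rate_now / s.pricing_rate_prior - 1


def one_minute_rule(s: Snapshot) -> tuple[bool, int]:
    """Return (prioritize?, number of yes answers)."""
    answers = [
        volume_share(s) >= 0.02,      # Is LLM traffic meaningful? (never round up)
        downstream_lift(s) >= 0.15,   # Are visitors moving toward pricing/demo?
        s.issues_trending_down and s.core_web_vitals_passing,  # Are we ready?
    ]
    yes_count = sum(answers)
    return yes_count >= 2, yes_count


print(one_minute_rule(Snapshot(300, 10_000, 0.05, 0.04, False, True)))
# -> (True, 2)
```

Treating a missing baseline as zero lift keeps the read conservative, in the spirit of "if unsure, round down."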

For those who prefer working with ranges:

- Green (prioritize): Volume ≥ 2% and Downstream ≥ +15% and Readiness pass.

- Yellow (monitor): Volume 0.5–2% or Downstream 0–15% or Readiness mixed.

- Red (deprioritize): Volume < 0.5% and Downstream ≤ 0%, or Readiness blocked.
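The ranges can be expressed as a small classifier. Two interpretation choices are assumptions: the Red condition is grouped as (low volume and no lift) or blocked readiness, and any read that is neither Green nor Red falls to Yellow. The readiness labels `pass`, `mixed`, and `blocked` are hypothetical.

```python
def traffic_light(volume: float, downstream: float, readiness: str) -> str:
    """Map a 28-day read onto green/yellow/red.

    volume and downstream are fractions (0.02 == 2%, 0.15 == +15%).
    readiness is one of the hypothetical labels 'pass', 'mixed', 'blocked'.
    """
    if readiness not in {"pass", "mixed", "blocked"}:
        raise ValueError(f"unknown readiness state: {readiness!r}")
    if volume >= 0.02 and downstream >= 0.15 and readiness == "pass":
        return "green"   # prioritize
    if (volume < 0.005 and downstream <= 0.0) or readiness == "blocked":
        return "red"     # deprioritize
    return "yellow"      # monitor: everything in between


print(traffic_light(0.01, 0.08, "mixed"))
# -> yellow
```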

If it's red or yellow: Deprioritize playbook

When signals are weak, focus readiness work on your top pages, reallocate effort to higher-yield programs, and keep monitoring lightly.

If the snapshot says the prize is small, don't force it. Tighten the foundation on your top three "LLM-magnet" pages (you can see these in the dashboard, too). That means clean HTML hierarchy, fast LCP, accessible components, explicit definitions, comparison tables, and basic schema. These fixes improve every channel, not just answer engines, and they're fast to ship.

Then move the budget where it converts. Upgrade pricing/demo UX (clarify tiers, reduce form friction, add proof), publish bottom-funnel content that sales actually uses (battlecards, ROI calculators, implementation guides), and run partner co-marketing that taps existing demand. Sales enablement beats speculative "LLM SEO" when the cohort is tiny.

Keep a light hand on the dial. Check the LLM segment weekly for trend shifts, annotate any releases, and schedule a 90-day review. If the numbers cross your thresholds later, you'll have a clean base and a clear signal to re-enter with intent.

Establish a monthly governance check (issues closed, schema valid, accessibility passes) and a one-change–one-annotation experiment rule. That keeps your read clean and repeatable.

If it's green: Tight 60-day LLM plan

When signals are strong, ship citation-ready content, deepen entities on winners, run controlled UTMs, and close top issues.

Green means you have momentum—cash it in with assets that answer engines can quote cleanly and users can act on. Start by refactoring the three to five winning pages into citation-ready formats. Audit answer quality on these pages: concise TL;DR, evidence density, clear sources, and structured comparisons.

Clarity is key here. Lead with a TL;DR that states the answer in two to three sentences, add definition callouts for key terms, use comparison matrices where choices exist, and cite primary sources at the point of claim. This structure invites inclusion in answers and shortens time-to-trust for human readers.

Next, deepen the substance where it already performs. Run an entity depth pass on those same winners using MarketMuse to fill missing concepts, synonyms, and relationships. Resist the urge to boil the ocean; expand only the pages that already attract LLM traffic and mid-journey presence. The goal is density, not sprawl.

Instrument the improvement so you can demonstrate its effectiveness. Add one clearly labeled, UTM-tagged link inside each page's TL;DR box (e.g., "See pricing"), annotate the change in your dashboard, and track the downstream lift to conversion pages from LLM-landed sessions. Keep the experiment clean: one new link per page, one annotation per change, and a 28-day read before you call it.
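For the UTM-tagged TL;DR link, a minimal helper using only Python's standard library might look like the following. The parameter values (`llm-page`, `tldr`, `llm-60day`, `see-pricing`) and the example.com URL are hypothetical; keep whatever naming taxonomy your team already uses so the 28-day read stays comparable.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str, campaign: str,
            content: Optional[str] = None) -> str:
    """Return url with UTM parameters added, preserving any existing query and fragment.

    Existing parameters are kept (duplicate keys collapse to their last value).
    """
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query))
    params.update({"utm_source": source, "utm_medium": medium, "utm_campaign": campaign})
    if content:
        params["utm_content"] = content
    return urlunsplit(parts._replace(query=urlencode(params)))


# Hypothetical values for the "See pricing" link in one page's TL;DR box.
print(add_utm("https://www.example.com/pricing", "llm-page", "tldr", "llm-60day", "see-pricing"))
# -> https://www.example.com/pricing?utm_source=llm-page&utm_medium=tldr&utm_campaign=llm-60day&utm_content=see-pricing
```

Before shipping, check how your analytics setup treats UTM parameters on internal links, since some tools reassign the session's traffic source when they encounter them.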

In parallel, close the gaps that block inclusion and UX. Tackle tech hygiene on those pages—fix accessibility violations, tighten heading hierarchy, compress assets for faster LCP, and ensure schema is valid and minimal. These are high-leverage changes that compound across channels and reduce friction in every journey.
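Heading hierarchy is one of the easier hygiene items to check automatically. The sketch below uses Python's built-in `html.parser` to record `<h1>` through `<h6>` levels in document order and flags skipped levels (for example, an `<h2>` followed directly by an `<h4>`). The single-`<h1>` check is a common convention rather than a hard rule, and this is a rough pre-check, not a substitute for Siteimprove's own SEO and accessibility reports.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Record heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list[str]:
    """List hierarchy issues: not exactly one <h1>, or a skipped level going down."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected one <h1>, found {levels.count(1)}")
    # Moving back up (h3 -> h2) is fine; only jumps down by more than one level are flagged.
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"<h{prev}> jumps to <h{cur}>")
    return problems


print(heading_problems("<h1>Pricing</h1><h3>Tiers</h3><h2>FAQ</h2>"))
# -> ['<h1> jumps to <h3>']
```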

Hold yourself to an outcome, not an activity. Your success metric is straightforward: increase conversion completion rates from LLM-exposed journeys by 10–20% within 60 days. If the cohort grows but completion doesn't, revisit the TL;DR offer, CTA placement, or form friction before adding new pages.
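To keep the 60-day judgment honest, compare completion rates rather than raw completions. This sketch assumes you can export (completions, journeys) counts for LLM-exposed journeys over equal-length windows before and after the plan, and it reads the 10–20% target as a relative lift, not percentage points; both are assumptions. It also flags the case the paragraph above warns about: the cohort grows while completion stays flat or falls.

```python
def completion_rate(completions: int, journeys: int) -> float:
    return completions / journeys if journeys else 0.0


def success_read(baseline: tuple[int, int], current: tuple[int, int],
                 min_lift: float = 0.10) -> dict:
    """Judge the 60-day plan on the completion rate of LLM-exposed journeys.

    baseline and current are (completions, journeys) for equal-length windows.
    The lift is relative (0.10 == +10%), an assumed reading of the target.
    """
    before = completion_rate(*baseline)
    after = completion_rate(*current)
    lift = after / before - 1 if before else 0.0
    cohort_grew = current[1] > baseline[1]
    return {
        "lift": lift,
        "met_target": lift >= min_lift,
        # Cohort up but completion flat or down: fix TL;DR offer, CTA, or form first.
        "revisit_offer_cta_form": cohort_grew and lift <= 0,
    }


# Made-up counts: 40/1,000 completions before, 60/1,200 after.
print(success_read(baseline=(40, 1_000), current=(60, 1_200)))
```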

What you can and cannot know

Siteimprove reveals journeys, behavior, and readiness; total LLM coverage and exact assisted attribution remain out of its scope.

To keep decisions clean, separate evidence you can measure from signals you can only infer.

You can know (from Siteimprove):

- Attributable clicks: Human sessions from detectable answer-engine referrers.

- Cohort behavior: Engagement on LLM landing pages (scroll, clicks, next-page rate).

- Journey presence: LLM cohort appearance in early/mid funnel steps vs. completion.

- Readiness issues: SEO, accessibility, and performance status on target pages.

You cannot know (yet):

- Full answer-engine coverage: Not every LLM exposes referrers or shows you every citation. The best you can get today is an inferred (theoretical) coverage model, not ground-truth coverage.

- Exact assisted attribution: Formal assisted-vs.-last-click attribution requires GA4 or external modeling.

- Precise zero-click value: Brand impact without clicks can't be measured directly, so you rely on proxies, signals that move when that exposure matters.

Here are some proxies to watch:

- Branded search lift: More people search your brand name after you start appearing in answers.

- Direct traffic lift: More visits come straight to your site (typed URL/bookmark).

- Help-center deflection: More views of help/docs with fewer tickets submitted.

- Sales cues (optional): More "heard about you in Copilot/Perplexity" mentions in forms or calls.
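Because these proxies are indirect, it helps to compute them the same way every time, over equal-length windows on either side of a dated annotation. The sketch below assumes hypothetical totals for branded searches, direct sessions, help-center views, and tickets. It reports relative changes only; a lift here is a signal consistent with zero-click exposure, not proof of it, since campaigns and seasonality move the same numbers.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change; a zero baseline is reported as no change."""
    return (after - before) / before if before else 0.0


def ratio(numerator: float, denominator: float) -> float:
    return numerator / denominator if denominator else 0.0


def proxy_read(baseline: dict, current: dict) -> dict:
    """Compare hypothetical proxy totals for two equal-length windows."""
    return {
        "branded_search_lift": pct_change(baseline["branded_searches"], current["branded_searches"]),
        "direct_traffic_lift": pct_change(baseline["direct_sessions"], current["direct_sessions"]),
        # Negative means fewer tickets per help-center view, i.e. more deflection.
        "tickets_per_help_view_change": pct_change(
            ratio(baseline["tickets"], baseline["help_views"]),
            ratio(current["tickets"], current["help_views"]),
        ),
    }


# Made-up totals for the windows before and after an annotated change.
before = {"branded_searches": 1_000, "direct_sessions": 5_000, "help_views": 2_000, "tickets": 200}
after = {"branded_searches": 1_100, "direct_sessions": 5_000, "help_views": 2_500, "tickets": 200}
print(proxy_read(before, after))
```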

Conclusion

Prioritize LLM traffic only when the measured value clears conservative thresholds. Otherwise, invest elsewhere and monitor.

You don't need perfect visibility to make a smart call. You need a disciplined read and the courage to say no. Start with a strict LLM segment and a 28-day snapshot in Siteimprove, then answer the three yes/no questions. Is volume meaningful? Are visitors moving toward pricing/demo? Are you operationally ready? If you answer 'yes' to two or more questions, prioritize. Otherwise, don't.

This post gave you the fast lane: the dashboard questions to ask, the plain-English thresholds, and the two forks in the road. If the data flags Red/Yellow, shore up your top pages and redeploy effort to higher-yield programs while you keep a light weekly watch.

If it's Green, run the tight 60-day plan: ship citation-ready pages, deepen entities where you're already winning, tag one clean UTM in the TL;DR, and close the top technical issues—then judge success on conversion completion, not activity.

Be explicit about what's knowable and what's not. You can measure attributable clicks, cohort behavior, journey presence, and readiness. You cannot claim full engine coverage, perfect assisted attribution, or precise zero-click value. Those are inference models, supported by conservative proxies and dated annotations.